The slowdown of transistor scaling, coupled with the surging democratization of AI, has spurred the rise of application-driven architectures to continue delivering gains in performance and energy efficiency. My PhD work has developed domain-specific hardware architectures for natural language processing (NLP) and AI training workloads, as summarized below.

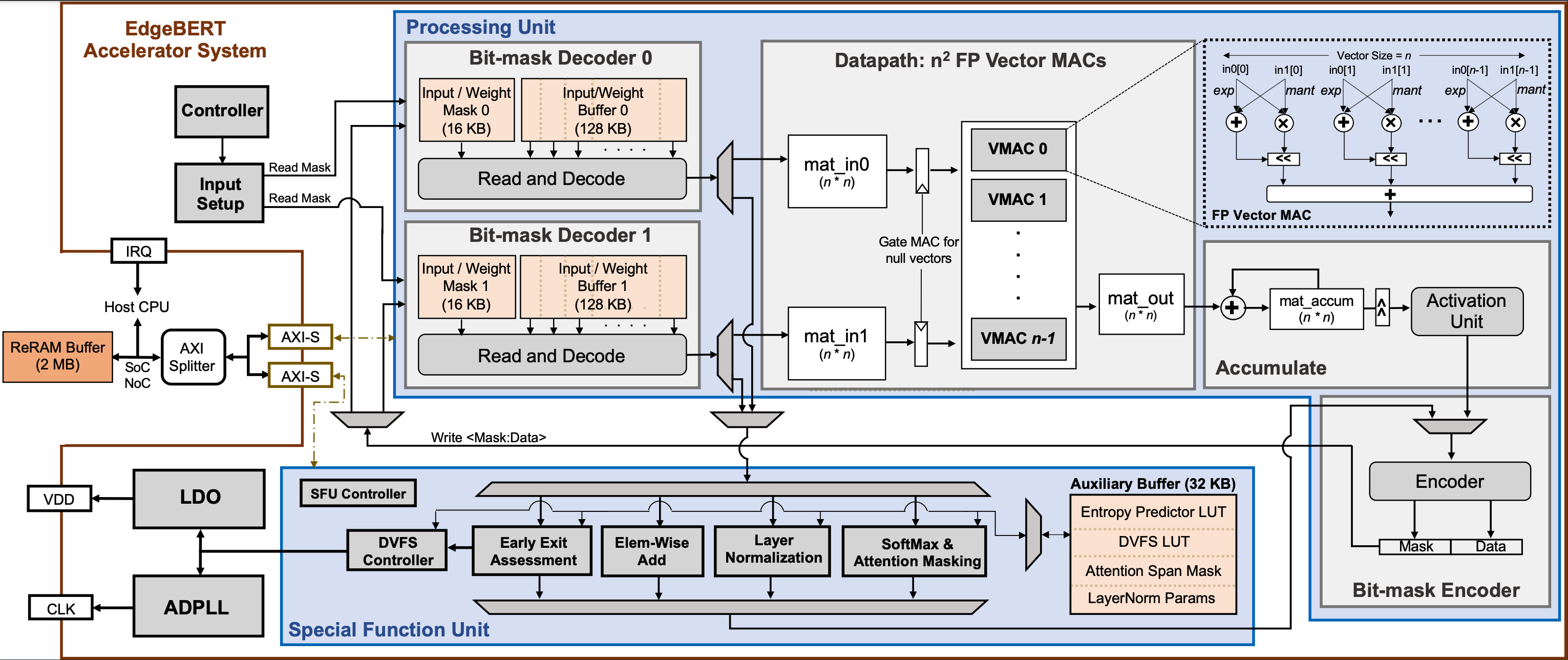

1) EdgeBERT is an algorithm-hardware co-design framework for multi-task NLP inference. The EdgeBERT accelerator tailors its latency and energy consumption via entropy-based early exit and dynamic voltage-frequency scaling (DVFS). Computation and memory-footprint overheads are further reduced by employing a calibrated combination of adaptive attention span, selective network pruning, and floating-point quantization. The EdgeBERT source code is open-sourced here.
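As a rough illustration of entropy-based early exit, the sketch below stops at the first layer whose classifier output has entropy below a threshold. It is a minimal sketch, not the EdgeBERT implementation: the function names, the per-layer logits input, and the threshold values are all hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy(probs):
    """Shannon entropy (in nats) of a probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def early_exit(layer_logits, threshold):
    """Return (exit_layer_index, predicted_class).

    layer_logits is a hypothetical list holding one classifier output per
    layer. Inference stops at the first layer whose prediction entropy
    falls below the threshold, so later layers are never computed.
    """
    for i, logits in enumerate(layer_logits):
        probs = softmax(logits)
        if entropy(probs) < threshold:
            return i, probs.index(max(probs))
    # No layer was confident enough: fall back to the final layer.
    probs = softmax(layer_logits[-1])
    return len(layer_logits) - 1, probs.index(max(probs))

# Layer 0 is nearly undecided; layer 1 is confident in class 0.
example_logits = [[0.1, 0.0], [5.0, 0.0]]
exit_layer, predicted = early_exit(example_logits, threshold=0.3)
```

A lower threshold trades latency for confidence: fewer inputs exit early, but those that do are predicted with a sharper distribution.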

The EdgeBERT hardware accelerator system highlighting its processing and special function units. A fast-switching LDO and fast-locking ADPLL are also integrated for latency-driven DVFS.

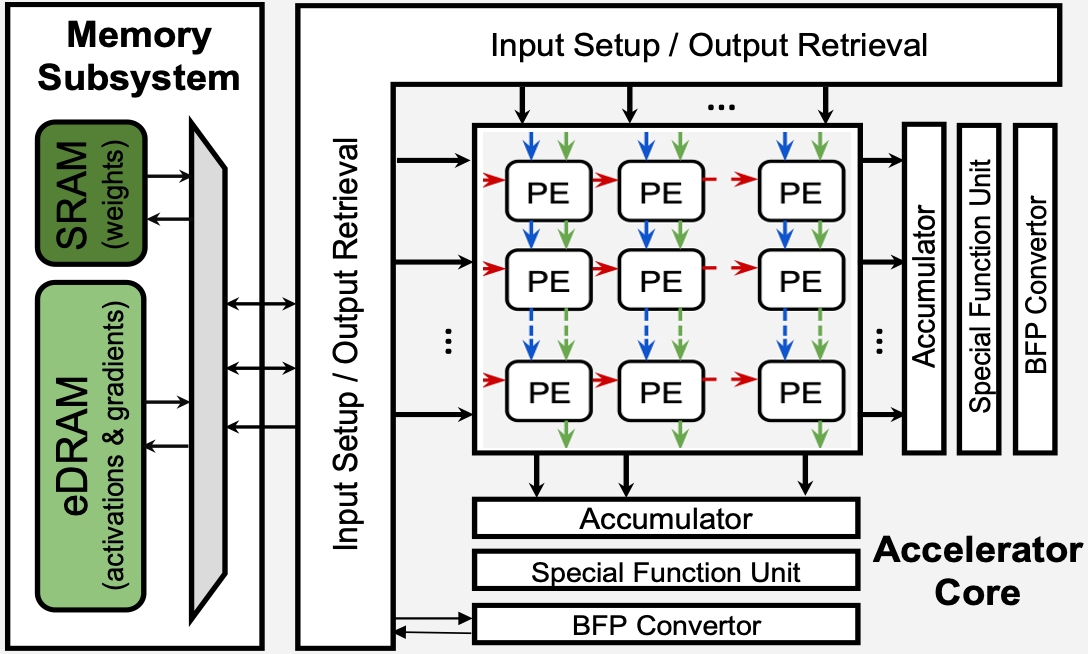

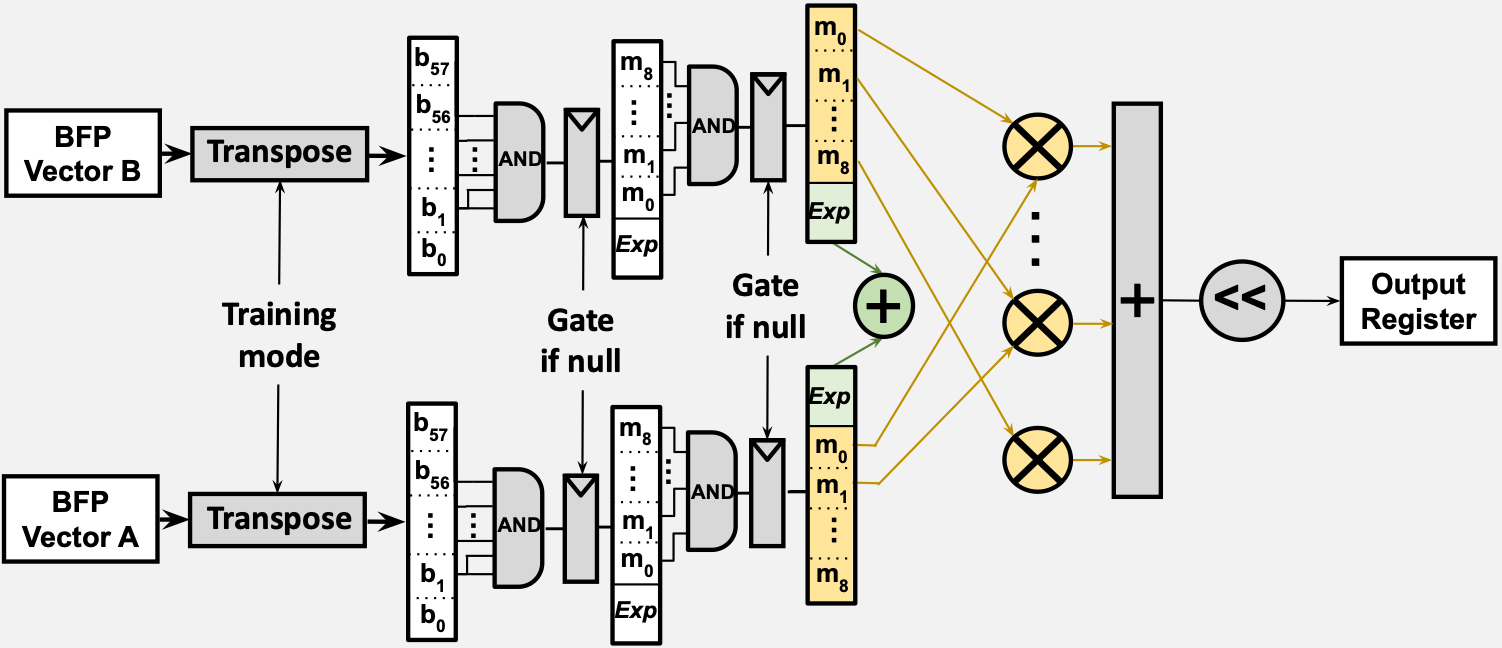

2) CAMEL is a model-architecture co-design framework for on-device machine learning training using embedded DRAMs. The specialized accelerator consists of a systolic array core and a hybrid eDRAM-SRAM memory subsystem as illustrated below. The reconfigurable systolic array core utilizes block floating-point (BFP)-based processing elements.
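For readers unfamiliar with block floating-point, the sketch below shows the general idea: a block of values shares one exponent, each value keeps only a small signed integer mantissa, and a dot product reduces to integer multiply-accumulates plus one final scaling. It is a generic illustration under assumed parameters (block contents, 8-bit mantissas, hypothetical function names), not the CAMEL processing-element design.

```python
import math

def bfp_quantize(block, mantissa_bits):
    """Quantize floats to (shared_exponent, signed integer mantissas)."""
    max_abs = max(abs(x) for x in block)
    if max_abs == 0.0:
        return 0, [0] * len(block)
    # Shared exponent: smallest e such that max_abs < 2**e.
    shared_exp = math.frexp(max_abs)[1]
    # One mantissa LSB corresponds to this step size.
    scale = 2.0 ** (shared_exp - (mantissa_bits - 1))
    limit = 2 ** (mantissa_bits - 1) - 1
    mantissas = [max(-limit, min(limit, round(x / scale))) for x in block]
    return shared_exp, mantissas

def bfp_dequantize(shared_exp, mantissas, mantissa_bits):
    """Reconstruct floats from a shared exponent and integer mantissas."""
    scale = 2.0 ** (shared_exp - (mantissa_bits - 1))
    return [m * scale for m in mantissas]

def bfp_dot(a, b, mantissa_bits):
    """Dot product using integer MACs and a single exponent addition."""
    exp_a, mant_a = bfp_quantize(a, mantissa_bits)
    exp_b, mant_b = bfp_quantize(b, mantissa_bits)
    acc = sum(x * y for x, y in zip(mant_a, mant_b))  # integer-only inner loop
    return acc * 2.0 ** (exp_a + exp_b - 2 * (mantissa_bits - 1))

shared_exp, mantissas = bfp_quantize([1.0, 0.5, -0.25, 0.0], mantissa_bits=8)
restored = bfp_dequantize(shared_exp, mantissas, mantissa_bits=8)
```

Because the exponent is handled once per block rather than once per value, the inner multiply-accumulate loop can run on integer datapaths, which is the usual motivation for BFP in training hardware.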

CAMEL ML training accelerator.

Block floating-point-based processing element.

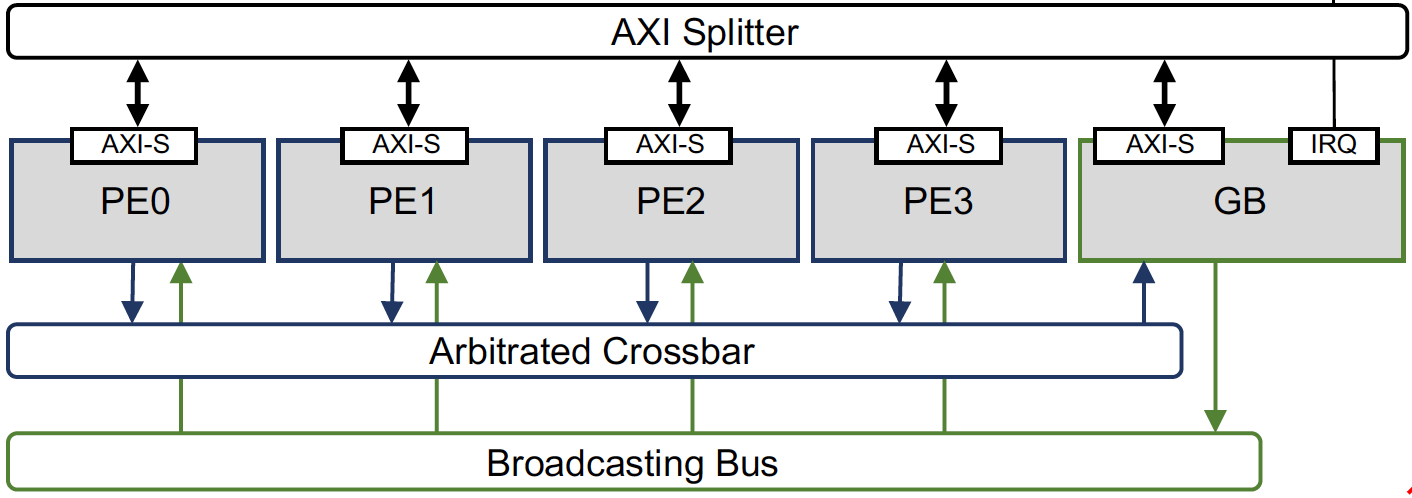

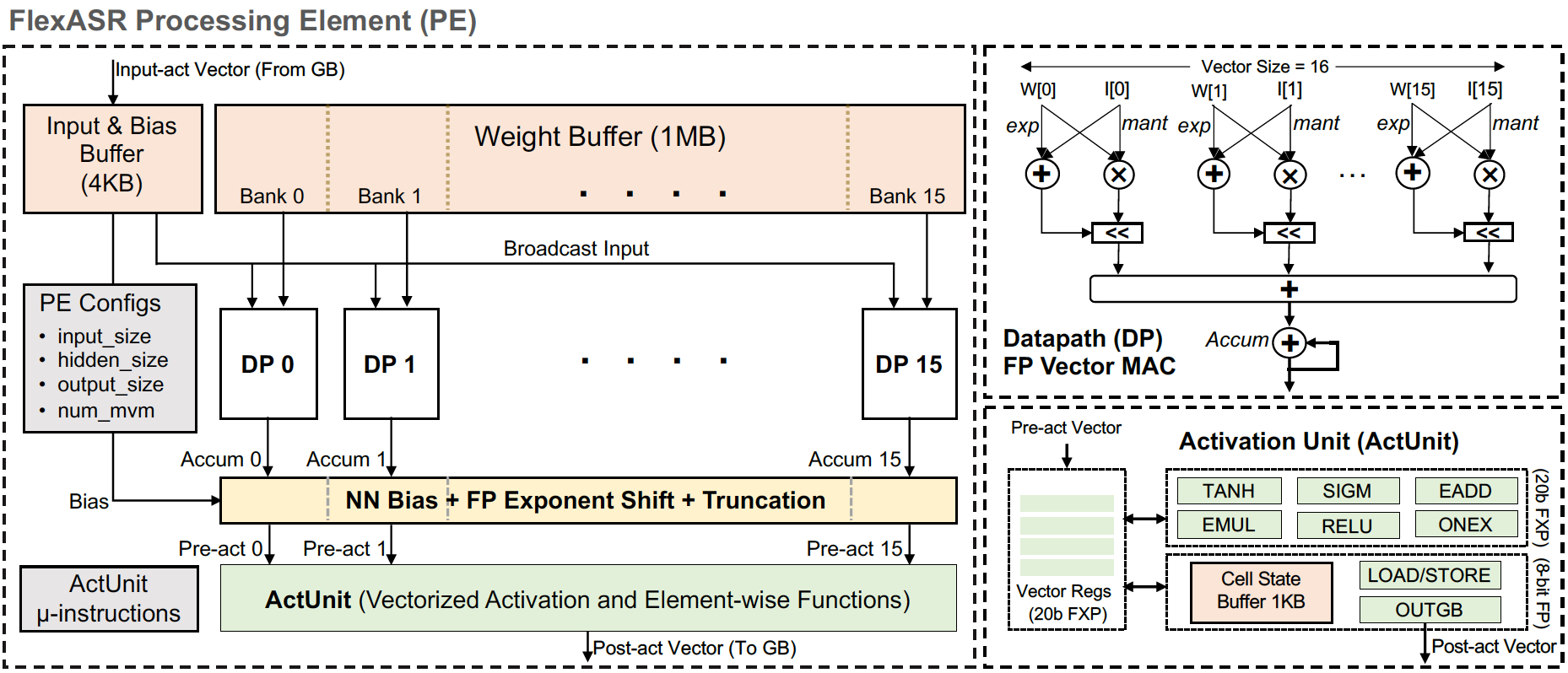

3) FlexASR is a hardware accelerator with AdaptivFloat-based processing elements, optimized for attention-based recurrent neural networks used in speech and machine translation AI workloads. FlexASR is open-sourced here.
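The sketch below gives a simplified reading of AdaptivFloat-style quantization: a floating-point format whose exponent bias is chosen per tensor so that the representable range lines up with the largest magnitude in that tensor. The bit widths, function name, and rounding details here are assumptions for illustration and are not taken from the FlexASR source.

```python
import math

def adaptivfloat_quantize(values, n_bits=8, n_exp=3):
    """Quantize floats to a sign / n_exp-bit exponent / mantissa format
    whose exponent bias is derived from the tensor's largest magnitude."""
    n_mant = n_bits - n_exp - 1  # one bit is reserved for the sign
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return [0.0] * len(values)
    # Align the top of the exponent range with the largest value.
    exp_max = math.frexp(max_abs)[1] - 1  # floor(log2(max_abs))
    exp_bias = exp_max - (2 ** n_exp - 1)
    # Assumption: the smallest encoding is given up to represent zero.
    value_min = 2.0 ** exp_bias * (1 + 2.0 ** -n_mant)
    value_max = 2.0 ** exp_max * (2 - 2.0 ** -n_mant)
    out = []
    for v in values:
        a = abs(v)
        if a < value_min:
            # Too small to represent: round to zero or to value_min.
            q = 0.0 if a < value_min / 2 else value_min
        elif a > value_max:
            q = value_max  # clamp to the largest magnitude
        else:
            exp = math.frexp(a)[1] - 1
            step = 2.0 ** (exp - n_mant)  # mantissa resolution at this exponent
            q = min(round(a / step) * step, value_max)
        out.append(q if v >= 0 else -q)
    return out

quantized = adaptivfloat_quantize([1.0, 0.5, -0.3, 0.001])
```

In this example the largest value is 1.0, so the 3-bit exponent covers 2^-7 through 2^0; the value 0.001 falls below that range and is flushed to zero.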

Top-level FlexASR accelerator.

AdaptivFloat-based processing element.